Looping through field values in Pipeline

I have a record that contains a text field with multiple values separated by commas (which I can also turn into a multi-select field if that makes things easier). I want to loop through the values in the field and, within each loop, perform a QB record update. In Pipelines, I understand how to loop over a list of records, but in this case I need the loop to be based on a list of values in a field. Is there any way to do this? Thanks.

------------------------------
Jennason
Quick Base Admin
------------------------------

1.2K Views, 0 likes, 22 Comments

Pipeline Make Request - Return Make Request JSON Output into fields

Update: I managed to retrieve the ticket ID by using {{c.json.ticket.id}}. The only thing that remains is to do the same for the users, but the value is not read when I format the Jinja the same way as for the ticket request, even though the structure of the JSON is the same.

Hi Everyone,

I have a Make Request step whose endpoint points to our Zendesk instance:

https://instance.zendesk.com/api/v2/users/search.json?query=email:{{a.email}}

As you can see above, I am using the email address stored in the record field a.email to check whether this end user exists on Zendesk. After running the pipeline, it returns the following in the Output (I've redacted extraneous data):

{ "users": [ { "id": 13617301225116, "url": "https://instance.zendesk.com/api/v2/users/13617301225116.json", "name": "Nick Green", "email": "redacted@gmail.com" } ], (extra data redacted) }

The users array contains the "id":13617301225116 value, which I would like to send back to the Quickbase record to populate a text field using an Update Record action in the pipeline. I use Jinja in an attempt to extract the specific value: {{c.content.users.id}}. However, this returns a null value. When I send the entire output to the field using just {{c.content}}, I get the proper JSON structure, but for some reason Jinja does not seem to parse the returned output to extract the "id" value. Using {{c.content.users.id | tojson}} doesn't work either and returns an error:

Validation error: Incorrect template "{{c.content.users.id|tojson}}". TypeError: Undefined is not JSON serializable

I also checked with ChatGPT, and it recommends using {{c.content.users.id}}. Has anyone been able to do the above successfully?

Cheers,
Nick

Solved, 745 Views, 0 likes, 2 Comments

Pipeline to remove file attachments

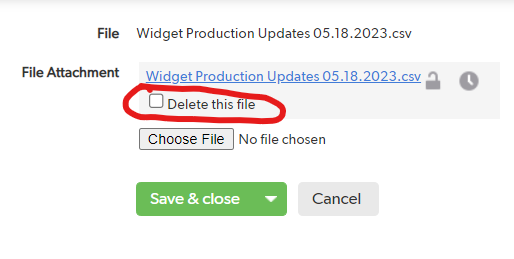

I am trying to find out whether there is a way to use a pipeline to remove file attachments. I am automating a process that incorporates data into our system using CSV uploads. I would like to keep some of the information from the upload available (e.g. the Requestor, Date Uploaded, File Name), but I don't want to actually archive the file on QuickBase due to space limitations. Is there a way to delete just the file itself without deleting the entire record?

You can of course click into the file and manually delete it (see the screenshot), but I want this to be automated, as I won't be doing the uploads myself. The field type is "file attachment" and it appears to be stored as a URL/Link. It does not behave like other fields in the pipeline, and I have not found a way to override it yet. Thanks!

------------------------------
Kevin Gardner
------------------------------

717 Views, 0 likes, 16 Comments

Jinja Code to Check for Empty String

Hello,

I received technical support from QB to modify a Pipeline that looks for dates when several conditions are met. The automation searches previous records for the same Employee Name, same Topic, and same Category. If any of those records have a date in the Coaching Counsel field, the pipeline enters the two most recent dates into the Coaching_pipelineText field of the new record that has the same Employee Name, same Topic, and same Category.

Currently, the pipeline works when there are available dates. However, when there are no dates, I receive the Pipeline Error email. In trying to rectify the issue, QB stated I should do the following:

"The error you encountered can be addressed using a Jinja conditional statement to clear the field or to input an empty string ("") when the JSON is blank and the data from the JSON when it's available. Please refer to the article below."

The Update code currently is this:

I am unsure how to write the Jinja if statement to accommodate the case where there are either no dates or only one date that meets the criteria. Would someone help me write the Jinja statement to make this work?

Brian

709 Views, 0 likes, 1 Comment

Pipeline Iterate over JSON nested data

I was pulling in JSON data from Ground Control and had some trouble pulling in nested data while creating a record. Here is how I solved it. I hope I can save some people time.

I used the method posted here to get started: JSON Handler details

Pulling the top-level data was not an issue. Nested inside my data is "customFieldValues". Sample:

"customFieldValues": [ { "name": "SomeFieldA", "value": "Abcdefg" }, { "name": "SomeFieldB", "value": "1234567" }, { "name": "SomeFieldC", "value": "CwhatIDidThere?" } ]

To pull in this value, I needed to use a raw_record Jinja expression and state the location in the array:

{{b.raw_record['customFieldValues'][0]['value']}}

0 is used since this is the first location in the array. (This assumes that b. is the reference used for the other fields, such as {{b.status}}.) The expression is placed in the field reference for SomeFieldA in the Create Record step.

------------------------------
James Carlos
------------------------------

603 Views, 0 likes, 3 Comments

Pipelines Advanced Query

The goal is to build an Advanced Query in a Pipeline that compares two User fields in the record. I am looking for the Record Owner to match the User in FID 9. The query that does not work is:

{'4'.EX.'9'}

What this query says is that Record Owner equals the literal 9, not the value of the User in FID 9. Searching for the right syntax has been fruitless. Does anyone know a solution?

------------------------------
Don Larson
Paasporter
Westlake OH
------------------------------

600 Views, 1 like, 10 Comments

How do I use Today() in a Pipeline Query line?

I have a pipeline that I need to run every morning to update a set of data based on several conditions. One of the data points is the Effective Date. I don't have the option to drag the value from a field, and I need the condition to look at all records from the previous day (the pipeline is scheduled to run at 1:00 AM each morning). The only option I see in the highlighted field is the calendar, and there is no option for "today".

------------------------------
Paul Peterson
------------------------------

600 Views, 0 likes, 9 Comments

(Pipelines) Get Users in Role / Process XML from HTTP API

Hello, any help/advice would be much appreciated.

I'm trying to send a reminder email to users in a specific Role, in a specific App, using Pipelines. As far as I can tell, there is no JSON RESTful API call that does this (Get Users only returns all users for an App, with no info on Roles). However, API_UserRoles returns each user in an app along with the Roles they have. In theory, I could loop over this and send the email only to those users with a specific role.

I can successfully use the Quickbase Channel -> 'Quickbase APIs' -> 'Make Request' step to call API_UserRoles and get this data.

Here's where I run into trouble: how do I process this XML into a form that another step could use (e.g. loop over it and send emails)?

I found this question: "To capture an XML response from an API in Pipelines", but I can't figure out how to get {{a.json.qdbapi.value}}. When I try to view its contents (emailed to myself), it is blank. There isn't any "Result" field or anything like that from this request step available in subsequent steps, only URL, Method, Headers, Content Type, and Body.

For instance, if I want to get the JSON out of the XML (using {{a.json.qdbapi.value}}) with the JSON Handler Channel -> 'Iterate over JSON Records', the 'JSON Source' field states 'No json sources in the pipeline'.

Thank you for any help you can offer,
~Daniel

------------------------------
Daniel
------------------------------

572 Views, 0 likes, 8 Comments

When the Expiration Date is On or Before Today, Change the Contract Status to Expired

Hello:

I have a contract application.

Goal: When the Contract Expiration Date field is on or before today, and the Exception to the Expiration Date Dynamic Form Rule field is unchecked, I want the Contract Status field to automatically change to Expired.

Question: What is the best method to accomplish the above goal: form rule, text formula, or pipeline?

1. I tried the below form rule. However, it works intermittently. I still see expired contracts that show as active.
2. I created a Formula - Text field named Contract Status Formula, but I am unsure whether my formula is correct. If( Today() <= [Contract Expiration Date], "Expired")
3. I tried to create a pipeline. My first attempt was Search Records/Update Record. My second attempt was On New Event/Search Records/Update Record. However, I know I am missing a step.

Any step-by-step guidance would be greatly appreciated.

Thank you,
Roula

Solved, 502 Views, 1 like, 12 Comments

Pipeline issue: "invalid literal for Decimal" ???

What the heck does this mean? I can't seem to figure it out. ("1170 Martingale" is the name of the record being modified to trigger the pipeline.) I have no idea what the export fields are referring to either. I can't even seem to locate a similar discussion on here. Any ideas out there? The pipeline fails to produce the action because of this error.

Validation error: Invalid literal for Decimal: u'1170 Martingale'

Input (Options)
table: "bkcuzgpzg"
export_fields: Array[6]
0: "112"
1: "7"
2: "159"
3: "148"
4: "157"
5: "177"

Input (Mapping)
related_opportunity: "{{a.opportunity}}"
related_customer: "{{a.opportunity_contact_full_name}}"
scheduled_for: "amanda@getnewclosets.com"
schedule_status: "Scheduled"
scheduled_date_time: "{{a.updated_at}}"
related_company: "{{a.opportunity_company_name}}"
activity_type: "Schedule LE"

------------------------------
Thanks,
Chris Newsome
------------------------------

470 Views, 0 likes, 5 Comments
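
One possible reading of the "Invalid literal for Decimal" error above, offered as a sketch rather than a confirmed diagnosis: the message looks like the one Python's decimal module raises when text is passed where a number is expected. That would fit if one of the mapped fields (for example related_opportunity, assuming it is a numeric reference field) is receiving the record's name instead of a numeric value. The helper name to_numeric_field and the sample ID "42" below are hypothetical and only illustrate the failure mode; they are not part of Pipelines.

```python
from decimal import Decimal, InvalidOperation


def to_numeric_field(value):
    """Hypothetical stand-in for the conversion a numeric field needs.

    Returns a Decimal, or raises ValueError when the text is not a number.
    """
    try:
        return Decimal(str(value))
    except InvalidOperation as exc:
        # Mirrors the wording of the error reported in the post.
        raise ValueError(f"Invalid literal for Decimal: {value!r}") from exc


if __name__ == "__main__":
    # A record name is plain text, so the conversion fails.
    try:
        to_numeric_field("1170 Martingale")
    except ValueError as err:
        print(err)

    # A numeric value (hypothetical record ID) converts without error.
    print(to_numeric_field("42"))
```

If this reading applies, the thing to check would be the field type of each target in the mapping and whether the mapped source value is text or a number.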